4 · Dataset Structure

You may download the dataset from the Apollo 3D car challenges page.

The folder structure of the 3d car detection challenge is as follows:

{root}/{folder}/{image_name}{ext}

The meaning of the individual elements is:

- camera: camera intrinsic parameters.
- car_models: the set of car models, re-saved as Python-friendly pkl files.
- car_poses: labelled car poses in the image.
- images: image set.
- split: training and validation image lists.

5 · Scripts

Several scripts are included with the dataset in a folder named scripts:

- demo.ipynb: demo for visualizing a labelled image.
- car_models.py: central file defining the IDs of all semantic classes and providing mappings between various class properties.

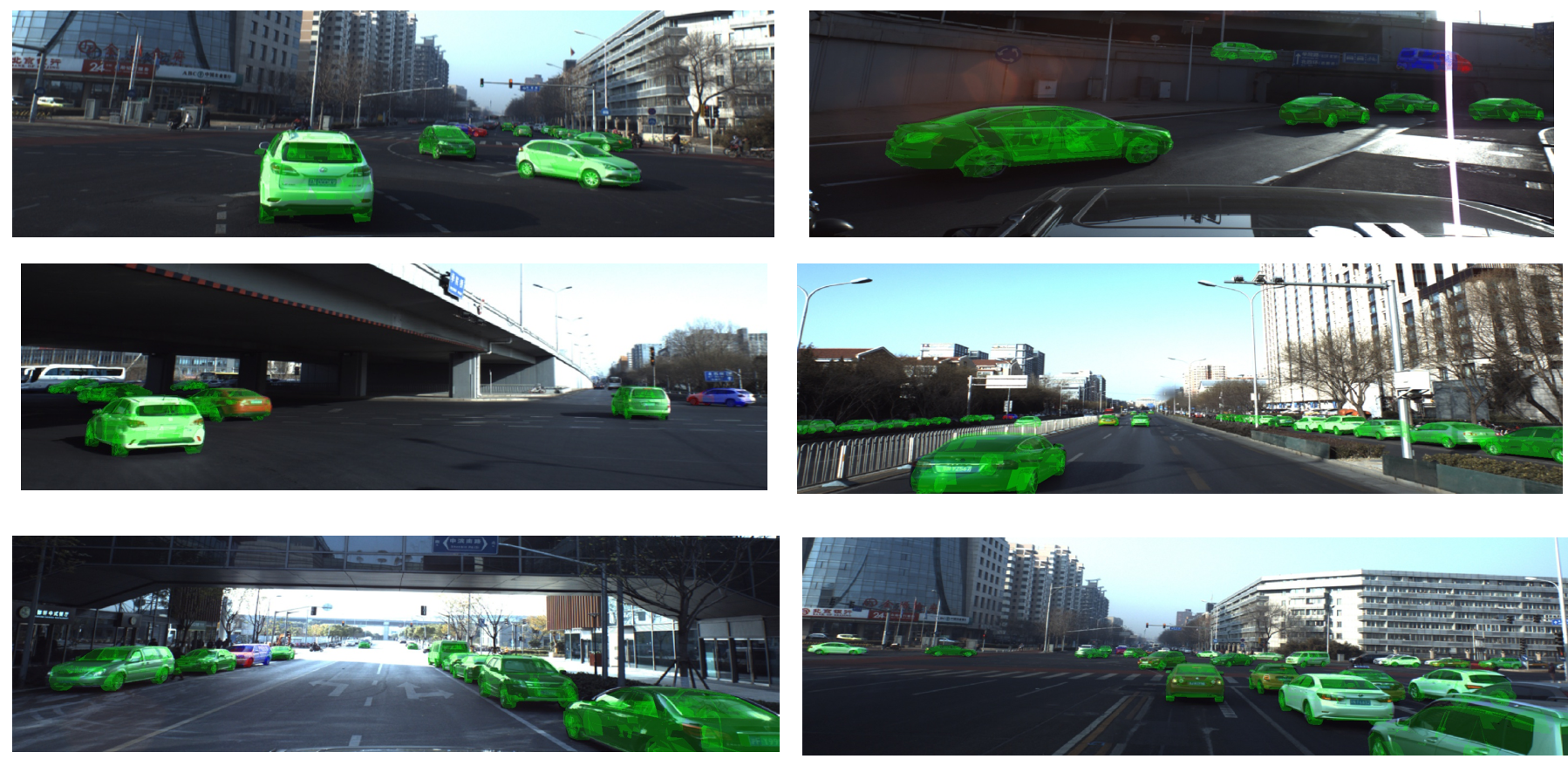

- render_car_instances.py: script for loading an image and rendering an image file.
- renderer/: Python wrapper scripts for rendering a car model from a 3D car mesh with OpenGL. We borrow part of the OpenGL rendering code from Displets and change it to an EGL offscreen render context with a Python API.
- install.sh: installation script for this library. Only tested on Ubuntu.

The scripts can be installed by running install.sh from a bash shell:

sudo bash install.sh

Please download the sample data from the Apollo 3D car challenges page using the sample data button, and put it under

../apolloscape/

Then run the following command to show a rendered result:

python render_car_instances.py --image_name='./test_example/pose_res' --data_dir='../apolloscape/3d_car_instance_sample'

6 · Evaluation

We follow an instance mean AP evaluation similar to that of the COCO dataset, but define the thresholds using 3D car similarity metrics (distance, orientation, shape). For distance and orientation, we use metrics similar to those used for evaluating self-localization, i.e. the Euclidean distance for translation and the arccos distance between quaternion representations for rotation.
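The two pose distances mentioned above can be sketched as follows. This is a minimal illustration, not the toolkit's own code: the function names, the (w, x, y, z) quaternion ordering and the choice to report the angle in degrees are assumptions made for this example.

```python
import math


def translation_distance(t_pred, t_gt):
    """Euclidean distance between two 3D translations (x, y, z)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(t_pred, t_gt)))


def rotation_distance_deg(q_pred, q_gt):
    """Angle between two rotations given as quaternions (w, x, y, z).

    Assumption: the arccos distance is taken as 2 * arccos(|<q1, q2>|)
    on unit quaternions and converted to degrees.
    """
    def unit(q):
        norm = math.sqrt(sum(c * c for c in q))
        return [c / norm for c in q]

    a, b = unit(q_pred), unit(q_gt)
    # The absolute value makes q and -q (the same rotation) compare as equal.
    dot = abs(sum(x * y for x, y in zip(a, b)))
    dot = min(1.0, dot)  # guard against rounding slightly above 1
    return math.degrees(2.0 * math.acos(dot))


# Hypothetical example: identity versus a quarter turn about the z axis.
half = math.sqrt(0.5)
example_rot = rotation_distance_deg((1.0, 0.0, 0.0, 0.0), (half, 0.0, 0.0, half))
example_trans = translation_distance((0.0, 0.0, 0.0), (3.0, 4.0, 0.0))
```

Working on quaternions directly avoids committing to a particular Euler-angle convention in the sketch; converting the submitted roll, pitch and yaw to a quaternion is left to the evaluation code.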

For shape similarity, we consider the reprojection mask similarity: we project each 3D model to 10 angles and compute the IoU between each pair of models. The resulting similarity matrix is provided in sim_mat.txt.

For submitting results, we require participants to include an estimated car_id, as defined in car_models.py, and the estimated 6DoF car pose relative to the camera, as demonstrated in the test_eval_data folder.

If you want your results evaluated with respect to car size, please also include an 'area' field in the submitted results, obtained by rendering the car on the image. Our final results will be based on AP over all the cars, the same as for the COCO dataset.

You may run the following command to try a sample evaluation:

python eval_car_instances.py --test_dir='./test_eval_data/det3d_res' --gt_dir='./test_eval_data/det3d_gt' --res_file='./test_eval_data/res.txt'

7 · Metric formula

We adopt the widely used mean Average Precision for object instance evaluation in 3D, similar to COCO detection. However, instead of using 2D mask IoU as the similarity criterion between predicted instances and ground truth, we propose to use the following 3D metrics, covering shape ($s$), 3D translation ($t$) and 3D rotation ($r$), to judge a true positive.

Specifically, given an estimated 3D car model in an image, $C_i = \{s_i, t_i, r_i\}$, and the ground truth model, $C_i^* = \{s_i^*, t_i^*, r_i^*\}$, we evaluate the three estimates respectively as follows:

For 3D shape, we consider reprojection similarity, by putting the model at a fixed location and rendering 10 views by rotating the object. We compute the mean IoU between the two masks rendered from each view. Formally, the metric is defined as

$$c_{shape} = \frac{1}{|V|} \sum_{v \in V} IoU\big(P(s_i), P(s_i^*)\big)_v$$

where $V$ is a set of camera views and $P(\cdot)$ denotes the mask rendered from a model.
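The mean-IoU shape metric described above can be sketched as below. This is only an illustration under stated assumptions: each rendered mask is represented as a set of (row, col) pixel coordinates, the renderer itself is not included, and the function names are hypothetical.

```python
def mask_iou(mask_a, mask_b):
    """IoU of two binary masks given as sets of (row, col) pixels."""
    union = len(mask_a | mask_b)
    if union == 0:
        return 0.0  # assumption: two empty masks count as no overlap
    return len(mask_a & mask_b) / union


def shape_similarity(views_pred, views_gt):
    """Mean IoU over corresponding rendered views of two car models."""
    if len(views_pred) != len(views_gt) or not views_pred:
        raise ValueError("both models need the same non-zero number of views")
    ious = [mask_iou(a, b) for a, b in zip(views_pred, views_gt)]
    return sum(ious) / len(ious)


# Hypothetical two-view example (the text above uses 10 views).
pred_views = [{(0, 0), (0, 1)}, {(1, 1)}]
gt_views = [{(0, 1), (0, 2)}, {(1, 1)}]
example_similarity = shape_similarity(pred_views, gt_views)
```

In the example the first view has an IoU of 1/3 and the second an IoU of 1, so the mean is 2/3.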

For 3D translation and rotation, we follow the same evaluation metrics as described in the self-localization README.md.

Then, we define a set of 10 thresholds for a true positive prediction from loose criterion to strict criterion:

shapeThrs - [.5:.05:.95] shape thresholds for $s$

rotThrs - [50:-5:5] rotation thresholds for $r$

transThrs - [2.8:-.3:0.1] translation thresholds for $t$

where the loosest criterion, (.5, 50, 2.8), means that the shape similarity must be greater than $0.5$, the rotation distance must be less than $50$ and the translation distance must be less than $2.8$; the stricter criteria can be interpreted correspondingly.

We use $c_0, c_1, \ldots, c_9$ to represent those criteria from loose to strict.
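As a rough sketch of how the 10 criteria could be applied to a single prediction, the snippet below builds the three threshold lists and checks which criteria a matched pair satisfies. The function name and the strict comparisons at the boundaries are assumptions for illustration; the evaluation script may handle ties differently.

```python
NUM_CRITERIA = 10

# Index 0 is the loosest criterion, index 9 the strictest.
SHAPE_THRS = [round(0.5 + 0.05 * i, 2) for i in range(NUM_CRITERIA)]  # .5 ... .95
ROT_THRS = [50 - 5 * i for i in range(NUM_CRITERIA)]                  # 50 ... 5
TRANS_THRS = [round(2.8 - 0.3 * i, 1) for i in range(NUM_CRITERIA)]   # 2.8 ... 0.1


def satisfied_criteria(shape_sim, rot_dist, trans_dist):
    """Return one boolean per criterion, from loose to strict.

    A criterion is met when the shape similarity is above its threshold
    and both pose distances are below theirs (boundary handling assumed).
    """
    return [
        shape_sim > s and rot_dist < r and trans_dist < t
        for s, r, t in zip(SHAPE_THRS, ROT_THRS, TRANS_THRS)
    ]


# Hypothetical prediction: shape similarity 0.72, rotation 12, translation 0.9.
example_flags = satisfied_criteria(0.72, 12.0, 0.9)
```

For this example the shape term is the limiting one: the prediction counts as a true positive under the five loosest criteria and fails from the 0.75 shape threshold onward.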

8 · Rules of ranking

Result benchmark will be:

| Method | AP | AP | AP | AP | AP | AP |
|---|---|---|---|---|---|---|
| Deepxxx | xx | xx | xx | xx | xx | xx |

Our ranking will be determined by the mean AP, as usual.

9 · Submission of data format

├── test
│   ├── image1.json
│   ├── image2.json
...

Here image1 is the image name, as a string.

- Example format of image1.json

[{
    "car_id" : int,
    "area": int,
    "pose" : [roll,pitch,yaw,x,y,z],
    "score" : float
}]

...

Here roll, pitch, yaw, x, y, z are float32 numbers, and car_id is an int that indicates the type of car. "area" can be computed with the rendering code provided in render_car_instances.py, by first rendering an image from the estimated set of models and then calculating the area of each instance.
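A minimal sketch of writing one per-image result file in the format above, using only the Python standard library. The helper names and the example values are hypothetical, and "area" is treated as optional here on the assumption that it is only needed for the size-based evaluation.

```python
import json
import os
import tempfile


def make_entry(car_id, pose, score, area=None):
    """Build one detection entry; pose is [roll, pitch, yaw, x, y, z]."""
    if len(pose) != 6:
        raise ValueError("pose must have 6 values: roll, pitch, yaw, x, y, z")
    entry = {
        "car_id": int(car_id),
        "pose": [float(v) for v in pose],
        "score": float(score),
    }
    if area is not None:
        entry["area"] = int(area)
    return entry


def write_image_result(out_dir, image_name, entries):
    """Write the list of entries for one image to {out_dir}/{image_name}.json."""
    os.makedirs(out_dir, exist_ok=True)
    path = os.path.join(out_dir, image_name + ".json")
    with open(path, "w") as f:
        json.dump(entries, f)
    return path


# Hypothetical detection written to a temporary "test" folder and read back.
entry = make_entry(3, [0.1, 0.2, 0.3, 1.0, 2.0, 30.0], 0.9, area=1200)
with tempfile.TemporaryDirectory() as tmp:
    path = write_image_result(os.path.join(tmp, "test"), "image1", [entry])
    with open(path) as f:
        loaded = json.load(f)
```

Going through json.dump rather than hand-formatting the file guarantees valid JSON, for instance no trailing comma after the last field.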